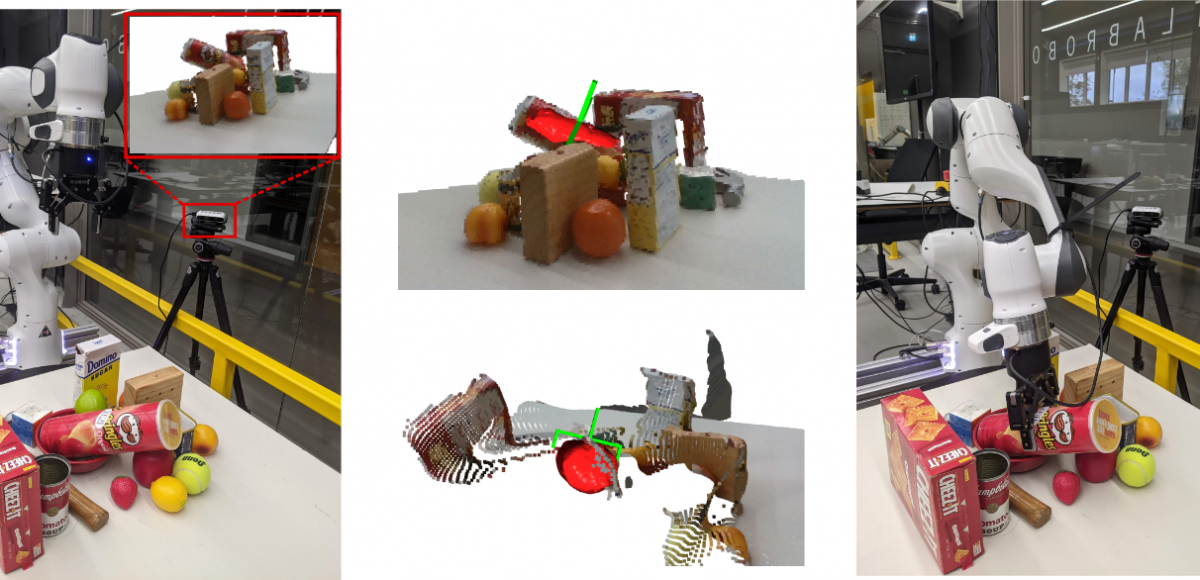

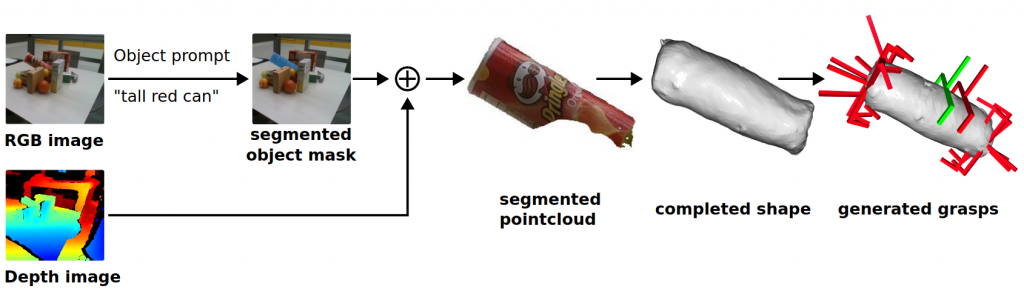

Abstract: In vision-based robot manipulation, a single camera view captures only one side of each object of interest, and occlusions in cluttered scenes restrict visibility further. As a result, the observed geometry is incomplete, and grasp estimation algorithms perform suboptimally. To address this limitation, we leverage diffusion models to perform category-level 3D shape completion from partial depth observations obtained from a single view, reconstructing complete object geometries to provide richer context for grasp planning. Our method focuses on common household items with diverse geometries, generating full 3D shapes that serve as input to downstream grasp inference networks. Unlike prior work, which primarily considers isolated objects or minimal clutter, we evaluate shape completion and grasping in realistic clutter scenarios with household objects. In preliminary evaluations on a cluttered scene, our approach consistently achieves higher grasp success rates, improving by 23% over a naive baseline without shape completion and by 19% over a recent state-of-the-art shape completion approach.
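To illustrate why a single-view depth observation yields only partial geometry, the following minimal sketch back-projects a depth image into a camera-frame point cloud using the standard pinhole model. It is an illustration under stated assumptions, not the authors' pipeline: the function name `depth_to_partial_cloud`, the intrinsics `fx`, `fy`, `cx`, `cy`, and the toy depth image are all hypothetical. Only surfaces facing the camera produce points, which is the gap that shape completion is meant to fill.

```python
import numpy as np


def depth_to_partial_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth image (metres) into an N x 3 camera-frame point cloud.

    Hypothetical helper for illustration; assumes an ideal pinhole camera
    and treats zero depth as "no measurement".
    """
    h, w = depth.shape
    # u indexes columns (image x), v indexes rows (image y).
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    valid = depth > 0
    z = depth[valid]
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    return np.stack([x, y, z], axis=1)


# Toy 4x4 depth image: a 2x2 patch of valid depth at 0.5 m, zeros elsewhere.
depth = np.zeros((4, 4))
depth[1:3, 1:3] = 0.5
cloud = depth_to_partial_cloud(depth, fx=2.0, fy=2.0, cx=1.5, cy=1.5)
```

The resulting `cloud` contains one 3D point per valid pixel, all lying on the visible surface; the back side of the object contributes nothing.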

Citation

@inproceedings{kashyap2025singleview,
  author    = {Abhishek Kashyap and Yuxuan Yang and Henrik Andreasson and Todor Stoyanov},
  title     = {Single-View Shape Completion for Robotic Grasping in Clutter},
  booktitle = {Proceedings of the 13th International Conference on Robot Intelligence Technology and Applications (RiTA 2025)},
  year      = {2025},
  series    = {Lecture Notes in Networks and Systems},
  publisher = {Springer},
  address   = {London, United Kingdom},
  month     = dec,
  url       = {https://amm.aass.oru.se/shape-completion-grasping}
}